The First International AI Safety Report

A Deep Dive into Risks, Challenges, and Future Trajectories

Artificial Intelligence (AI), once a specialized area of technology, has become a powerful driver of change across industries and government operations. As AI systems grow more capable, concerns about safety risks and ethical implications grow with them. The International AI Safety Report 2025 is an international collaboration to evaluate AI risks systematically. This article summarizes the report's principal conclusions, with attention to its technical analysis, future implications, and existing challenges.

Understanding the Report’s Scope

The International AI Safety Report is the product of a joint effort involving 30 countries and international organizations such as the OECD, the EU, and the UN, with contributions from AI experts. Its central subject is general-purpose AI (GPAI): systems that can perform many different tasks, from text generation to scientific analysis. The report examines what AI can currently do, identifies the associated risks, and assesses methods for mitigating them.

AI's Rapid Evolution and Capabilities

The report's most prominent finding is how quickly AI capabilities are advancing. AI systems have moved beyond basic response generation and now perform well at programming, scientific reasoning, and strategic planning. The report notes that models have improved at scientific analysis and problem-solving as companies invest more in autonomous AI agents. Experts remain uncertain about how AI will scale: it is debated whether progress will continue at its current pace or will require new methods beyond more data and computing power.

Emerging AI Risks

The report categorizes AI-related risks into three key areas:

Malicious use: AI can be used to spread false information, produce deepfake content, and enable cyberattacks. These capabilities make it easier to manipulate public perception and exploit cybersecurity vulnerabilities. The danger grows if AI is misused to help create biological weapons.

Malfunctions: Despite continuing efforts to improve reliability, AI systems still hallucinate, produce misinformation, and carry unintended biases. As autonomous systems advance, experts also worry about losing control over AI behavior.

Systemic risks: Increasing automation threatens labor markets and raises concerns about widespread job displacement. AI research and development is concentrated in a small number of countries, which could widen economic disparities between nations. AI development also consumes large amounts of energy, which poses environmental sustainability challenges, and AI’s ability to process massive volumes of user data intensifies privacy concerns.

Managing AI Risks

The report highlights several obstacles in AI risk management:

Lack of transparency: Most AI systems function as "black boxes," so their decision-making processes cannot be fully understood.

Regulatory challenges: AI technology advances so quickly that regulators struggle to develop adequate frameworks.

Competitive pressures: Competition in the AI industry pushes companies to prioritize fast releases over safety protocols.

Despite these challenges, researchers are actively exploring methods to mitigate AI risks, including:

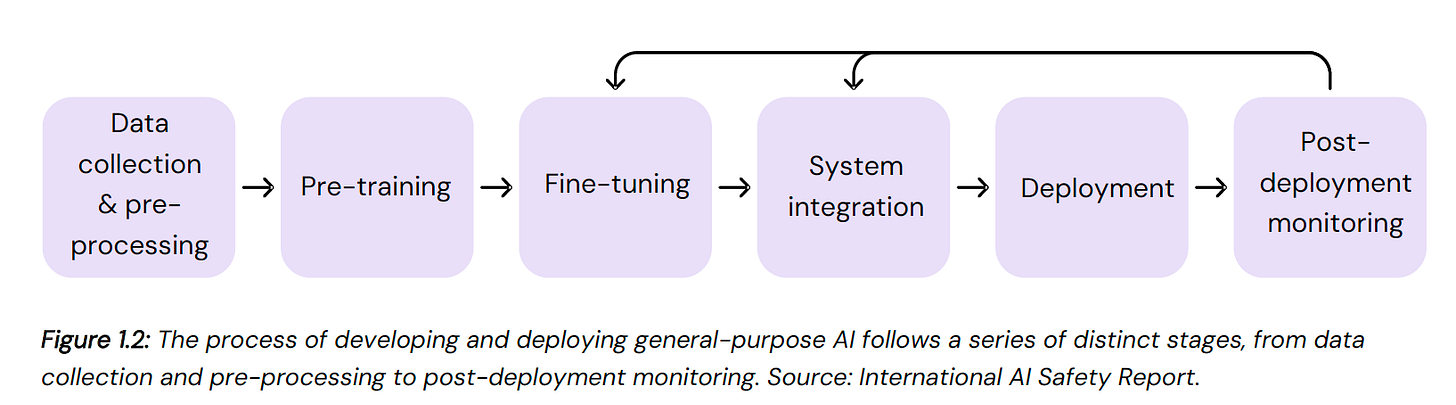

Methods that guide AI models to stay within predetermined human ethical standards and safety boundaries.

Adversarial training to find and fix vulnerabilities before AI systems are deployed.

Real-time systems that monitor AI behavior and intervene to manage risks that arise after deployment.

Global Policy Considerations

According to the report, international collaboration remains critical for effective AI governance. Key policy initiatives being considered include:

Developing AI safety standards to enable responsible AI deployment throughout various jurisdictions.

Building detection systems that identify emerging AI threats early, before they escalate into significant dangers.

Requiring AI developers to meet transparency requirements that build accountability and trust.

Forming public-private partnerships that connect regulatory agencies with industry executives and civil society groups.

Looking Ahead

The International AI Safety Report 2025 is a starting framework for further research and policy debate, not a precise plan. Societies face a critical choice: advance through the use of AI or allow unregulated dangers to spread. The global AI community needs to work together to balance technological advancement with risk management.

The International AI Safety Report 2025 is a strong call for prompt action. It lays out AI's enormous potential alongside its serious risks and urges policymakers and industry experts to act deliberately. Human decisions will determine AI's future. Protecting humanity from AI threats requires transparent governance, responsible development, and ethical decision-making, so that AI serves humanity rather than becoming an uncontrolled technological risk.

Reference: https://www.gov.uk/government/publications/international-ai-safety-report-2025